27.09.2023

User and stakeholder involvement in realist evaluation: Interview with Ana Manzano (University of Leeds)

Dr. Ana Manzano is an associate professor in public policy at the School of Sociology & Social Policy, University of Leeds. She is an expert in health policy and healthcare evaluation, focusing on theory-driven realist evaluation approaches, advanced qualitative methods, and their application in mixed-methods studies. She has published widely on quality standards for evaluation and is passionate about the relationship between methods and evidence at the intersection of the health and social domains. She has worked on complex public policy issues such as maternal mental health, chronic illness, financial incentives in healthcare, user decision-making and healthcare utilisation.

On June 8, 2023, she led a session in LIEPP’s METHEVAL seminar series titled "User and stakeholder involvement in realist evaluation."

Could you briefly describe realist evaluation and the context in which it became more popular?

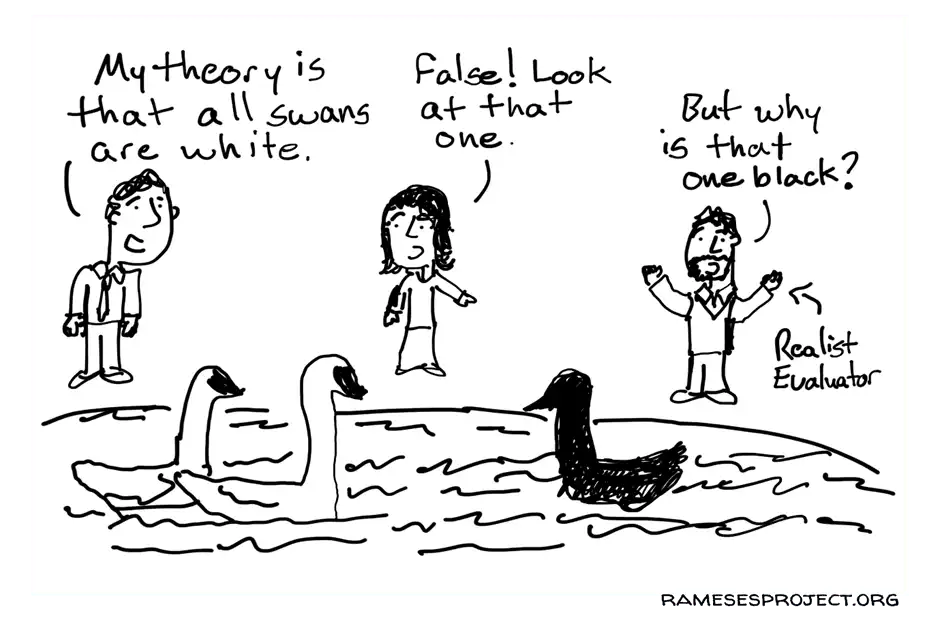

Realist evaluation is a theory-driven approach to policy evaluation that aims to understand causality and to explain the intricate relationships between outcomes, mechanisms, and contextual circumstances at various levels (macro, meso, and micro) within a given policy, programme or intervention. It rose to prominence in the early 21st century, following the influential book "Realistic Evaluation", published in 1997 by the British academics and evaluators Ray Pawson and Nick Tilley. The approach gained traction within the evidence-based policy movement, which had grown out of the evidence-based medicine movement that emerged in the early 1990s.

In the United Kingdom, the then Prime Minister Tony Blair popularised an agenda built on the idea that "what counts is what works." Likewise, the Obama administration in the United States prioritised evidence-based social policy. Realist evaluation emerged as an academic response to the traditional outcome-driven evaluation approaches on which these political agendas relied. Those approaches often failed to acknowledge or examine the underlying mechanisms and contextual factors that influence the effectiveness and scalability of programmes.

Today, realist evaluation is a well-established method for evaluating complex programmes, policies, and interventions. It is recommended by the UK government and the UK Medical Research Council as a valuable approach in policy evaluation.

In practice, how does realist evaluation involve users and stakeholders?

Realist evaluation requires evaluators to have a deep understanding of the programme they are evaluating. To gain it, they must engage with stakeholders who know these programmes well. Realist evaluation projects typically do not try to answer the question "Does this programme work?", because the approach does not assume that programmes, in themselves, "work". Instead, programmes are understood to offer resources to people (users, frontline staff, managers and whoever else the programme is addressed to), and it is these people's choices about whether and how to act on those resources that determine whether the programme works. Realist evaluation questions therefore ask: "How does this programme work? For whom? Under what circumstances? And why?". To answer such questions, evaluators rely on programme users and stakeholders, who can help disentangle programme complexity and the role of context in generating programme results. That is: how the programme may work differently for different people, in different localities or institutions, or at different points throughout its lifespan.

What are the limits of user involvement in evaluation, and more specifically in realist evaluation?

Involving users in research and evaluation is always a difficult task that requires skill. People have busy lives with jobs and/or caring responsibilities, and research is not their top priority. When they agree to be interviewed or to answer our surveys, they mostly do so out of altruism, although some people may also do it as part of their activism (e.g., raising awareness about social injustice). User involvement in evaluation is more than just being a participant in our data collection: people are asked to help evaluators for longer periods, at various stages of the project, or even to carry out the evaluation themselves.

One of the key challenges is finding people who are willing to be involved at that level of commitment and continue for the duration of the evaluation project. Another challenge is to define who qualifies as a user. Figuring out who we are missing when we involve people in evaluation is critical to ensure that the evaluation captures the perspectives and experiences most relevant to the evaluation objectives.

Finally, achieving high-quality involvement of users in evaluation requires capacity building, which is time-consuming and costly. Users may need support and training to engage effectively in the evaluation process. In realist evaluation projects all of these barriers remain, and other challenges emerge. For example, users are unlikely to have heard of the realist evaluation approach before, so they may need training both in the participatory approach to evaluation (helping users develop evaluative thinking) and in realist evaluation thinking (focusing on hows, whys and complex causality).

How could other evaluators use concepts of realist evaluation when it comes to the evaluation of public policies?

There are many realist evaluation concepts that can be useful to other evaluators, for example:

- Developing programme hypotheses (assumptions, also called "programme theories") about how the policy is expected to work. This is always a useful exercise when laying the groundwork for any evaluation.

- Articulating underlying assumptions as context-mechanism-outcome configurations: how different contextual factors enable or hinder specific programme mechanisms in producing expected and/or unexpected results. It is all about understanding what influences what, for whom it differs, and why this is the case.

These concepts are not unique to the realist evaluation approach, so experienced evaluators may already use them, but in a more intuitive, less structured way.

During the spring of 2023, you were a visiting scholar at LIEPP. How was your experience?

My experience as a visiting scholar at LIEPP was great and intellectually stimulating! It left a lasting impression because it offered a unique opportunity to broaden my intellectual horizons. LIEPP has a vibrant academic community, and I had the chance to interact with scholars from various disciplines. During my stay I also had the opportunity to participate as a discussant in the External Validity conference co-organised by LIEPP and the University of Maryland, and to present the first draft of a paper examining how users and stakeholders engage in realist evaluation studies. I enjoyed the chance to immerse myself in a different academic culture, which I found enriching both personally and professionally. The potential for fruitful future collaborations arising from my visit adds an exciting dimension to the experience. And of course, the vibrant atmosphere of Paris added to the enjoyment!

An interview led by Ariane Lacaze.